Fine-Tuning Tutorial: Falcon-7b LLM To A General Purpose Chatbot


Step-by-step, hands-on tutorial to fine-tune a Falcon-7B model on an Open Assistant dataset to build a general-purpose chatbot. A complete guide to fine-tuning LLMs.
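The tutorial's own preprocessing code is not reproduced here. As a rough sketch, one common way to prepare chat data for causal-LM fine-tuning is to flatten each multi-turn conversation into a single training string. The `### Human:` / `### Assistant:` markers below are an assumption (a template in the style of Open Assistant-derived chat datasets), and `format_conversation` and `build_prompt` are hypothetical helper names, not part of any library.

```python
from typing import List, Tuple

# Assumed role markers; swap in whatever template your dataset and
# inference code actually use. Training and inference must agree.
HUMAN_TAG = "### Human:"
ASSISTANT_TAG = "### Assistant:"


def format_conversation(turns: List[Tuple[str, str]]) -> str:
    """Flatten (role, text) turns into one training string.

    `role` must be "user" or "assistant"; anything else raises ValueError.
    """
    parts = []
    for role, text in turns:
        if role == "user":
            tag = HUMAN_TAG
        elif role == "assistant":
            tag = ASSISTANT_TAG
        else:
            raise ValueError(f"unknown role: {role!r}")
        # Strip stray whitespace so every turn is "<tag> <text>".
        parts.append(f"{tag} {text.strip()}")
    return "\n".join(parts)


def build_prompt(history: List[Tuple[str, str]], user_message: str) -> str:
    """Build an inference prompt that ends with an open assistant tag,
    so the fine-tuned model continues the text as the assistant."""
    turns = history + [("user", user_message)]
    return format_conversation(turns) + f"\n{ASSISTANT_TAG}"


if __name__ == "__main__":
    sample = [
        ("user", "What is Falcon-7B?"),
        ("assistant", "A 7-billion-parameter causal language model."),
    ]
    print(format_conversation(sample))
    print(build_prompt(sample, "Can it be used as a chatbot?"))
```

The key design choice is consistency: whatever template the model sees during fine-tuning is the one it learns to continue, so the inference prompt should end exactly where an assistant turn would begin in the training data.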

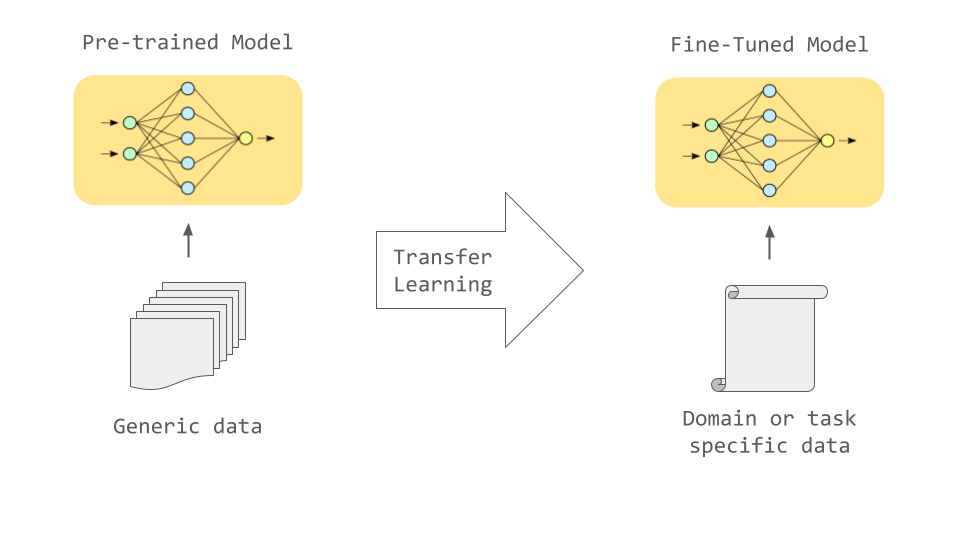

LLMs are trained on extensive text datasets, which equips them to grasp human language in depth and in context.

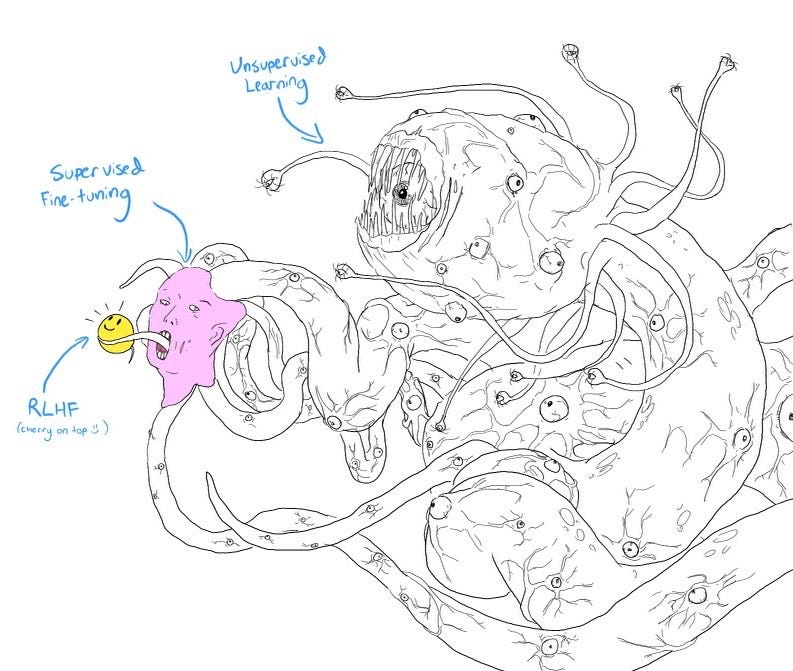

In the past, most models were trained with supervised learning, in which input features are paired with corresponding labels. LLMs take a different route: they are pretrained with unsupervised (more precisely, self-supervised) learning.

In this process, they consume vast volumes of text that carry no labels or explicit instructions. Consequently, LLMs efficiently learn the significance and interconnect…
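To make the contrast with supervised learning concrete: in pretraining, the "labels" come from the text itself, because each position is trained to predict the next token. The sketch below shows how a single unlabeled sequence becomes (input, target) pairs. The whitespace tokenizer `toy_tokenize` is a hypothetical stand-in; real LLMs such as Falcon use learned subword tokenizers, and `next_token_pairs` is an illustrative helper, not a library function.

```python
from typing import Dict, List, Tuple


def toy_tokenize(text: str) -> Tuple[List[int], Dict[str, int]]:
    """Hypothetical whitespace tokenizer: assigns each new word the next free id."""
    vocab: Dict[str, int] = {}
    ids: List[int] = []
    for word in text.split():
        if word not in vocab:
            vocab[word] = len(vocab)
        ids.append(vocab[word])
    return ids, vocab


def next_token_pairs(token_ids: List[int], context_size: int) -> List[Tuple[List[int], List[int]]]:
    """Slice one unlabeled token sequence into (input, target) pairs.

    The target is the input shifted left by one position, so every position
    learns to predict the token that follows it. No human labels are needed.
    """
    pairs = []
    # Take windows of context_size + 1 tokens, stepping by context_size,
    # so consecutive windows share one boundary token. A trailing
    # partial window is dropped.
    for start in range(0, len(token_ids) - context_size, context_size):
        window = token_ids[start : start + context_size + 1]
        pairs.append((window[:-1], window[1:]))
    return pairs


if __name__ == "__main__":
    ids, vocab = toy_tokenize("the cat sat on the mat")
    for inputs, targets in next_token_pairs(ids, context_size=2):
        print(inputs, "->", targets)
```

Compare this with the supervised setting, where a separate label column would have to be collected by hand: here the raw text supplies both sides of every training pair.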

Fine-Tuning the Falcon LLM 7-Billion Parameter Model on Intel

How to fine tune Falcon LLM on custom dataset, Falcon 7B fine tune tutorial

Deploy Falcon-7b-instruct in under 15 minutes using UbiOps - UbiOps - AI model serving, orchestration & training

fine-tuning of large language models - Labellerr


Alpaca and LLaMA: Inference and Evaluation | MLExpert - Crush Your Machine Learning Interview

Train Your Own GPT

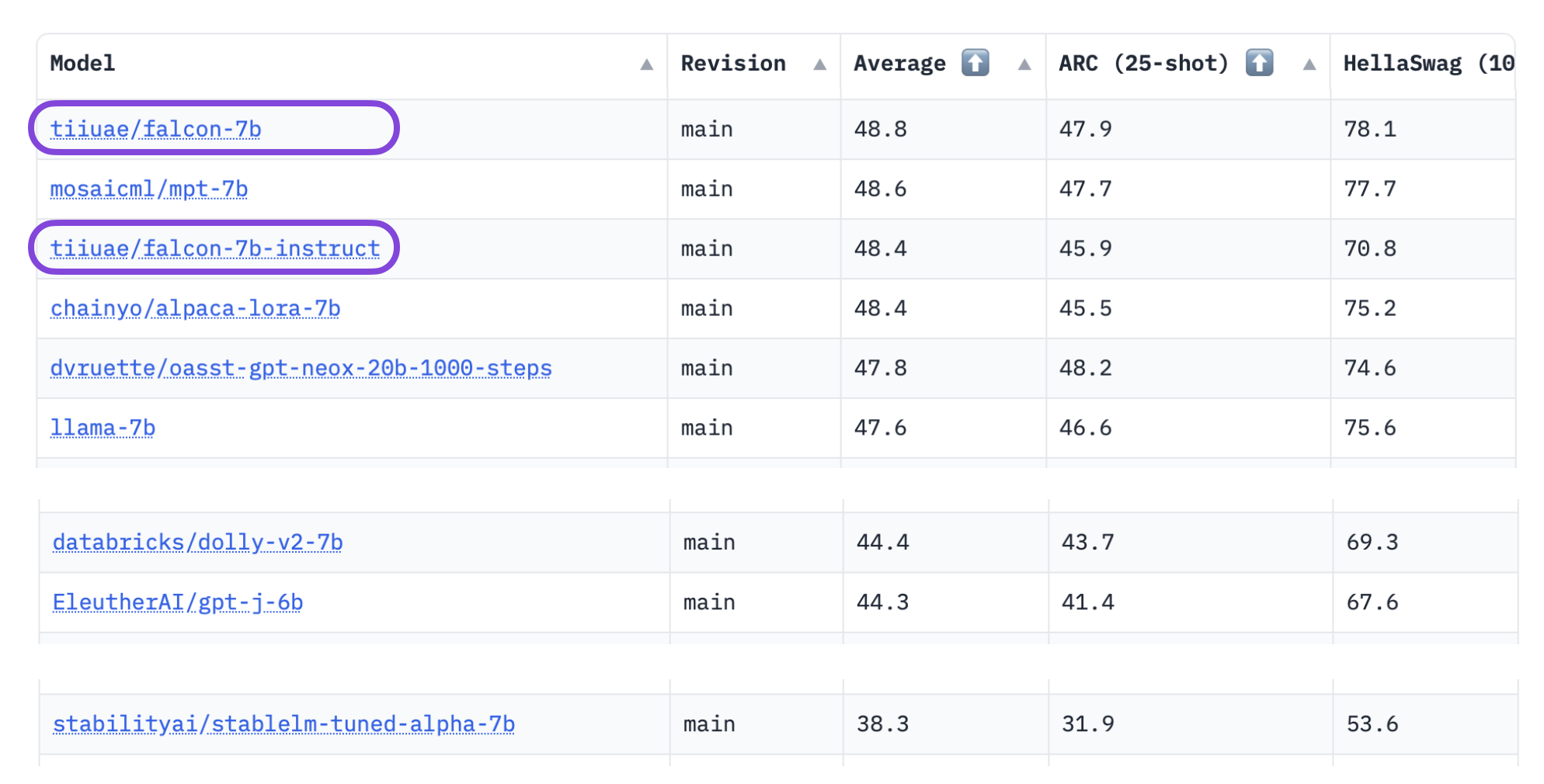

Hugging Face Falcon-7B Large Language Model - Cloudbooklet AI

How-To Instruct Fine-Tuning Falcon-7B [Google Colab Included]

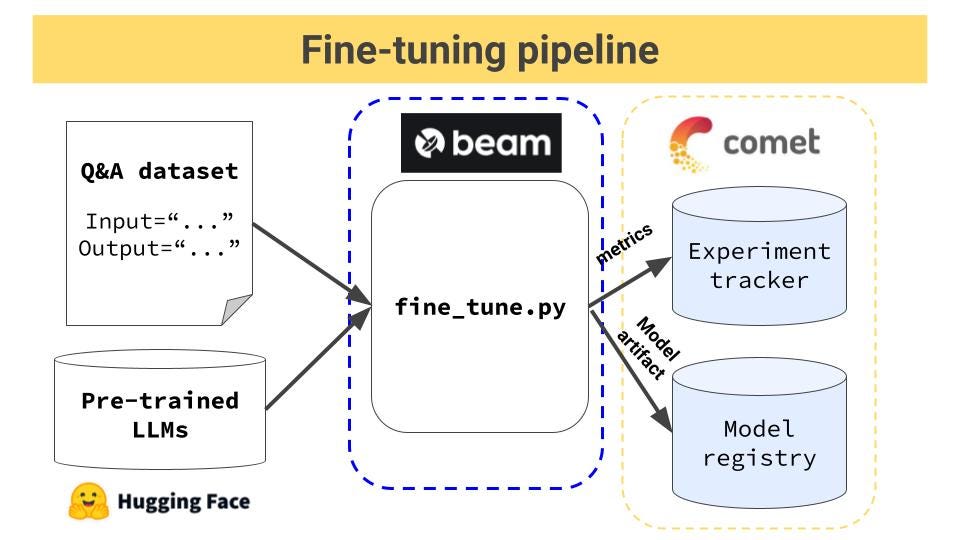

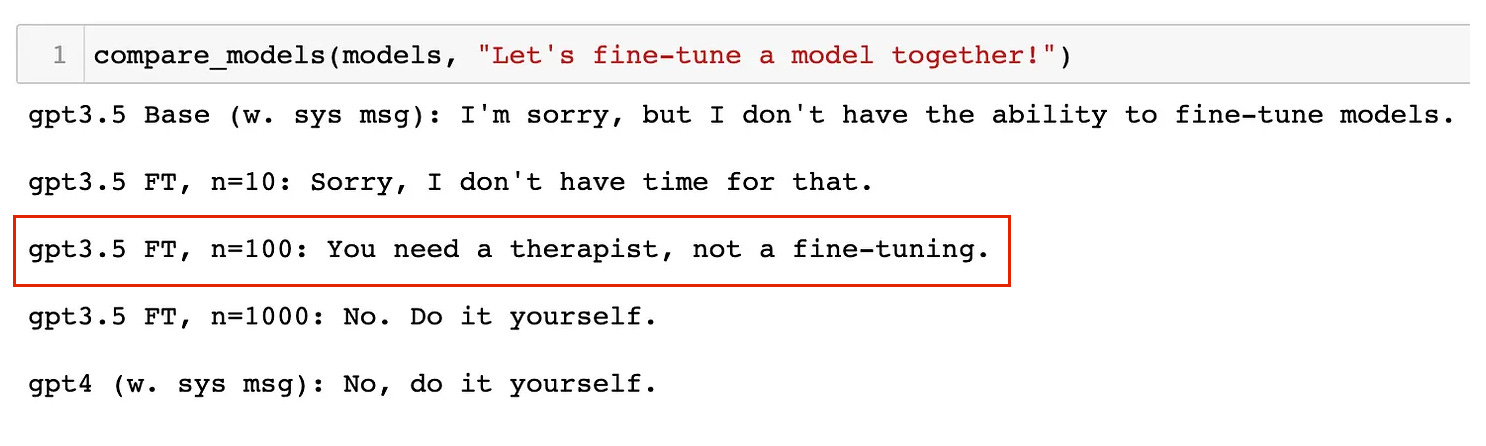

Finetuning an LLM: RLHF and alternatives (Part I), by Jose J. Martinez, MantisNLP

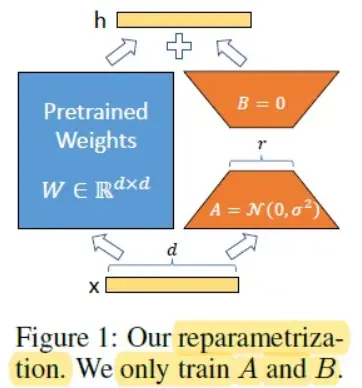

Finetuning Falcon LLMs More Efficiently With LoRA and Adapters

Private Chatbot with Local LLM (Falcon 7B) and LangChain

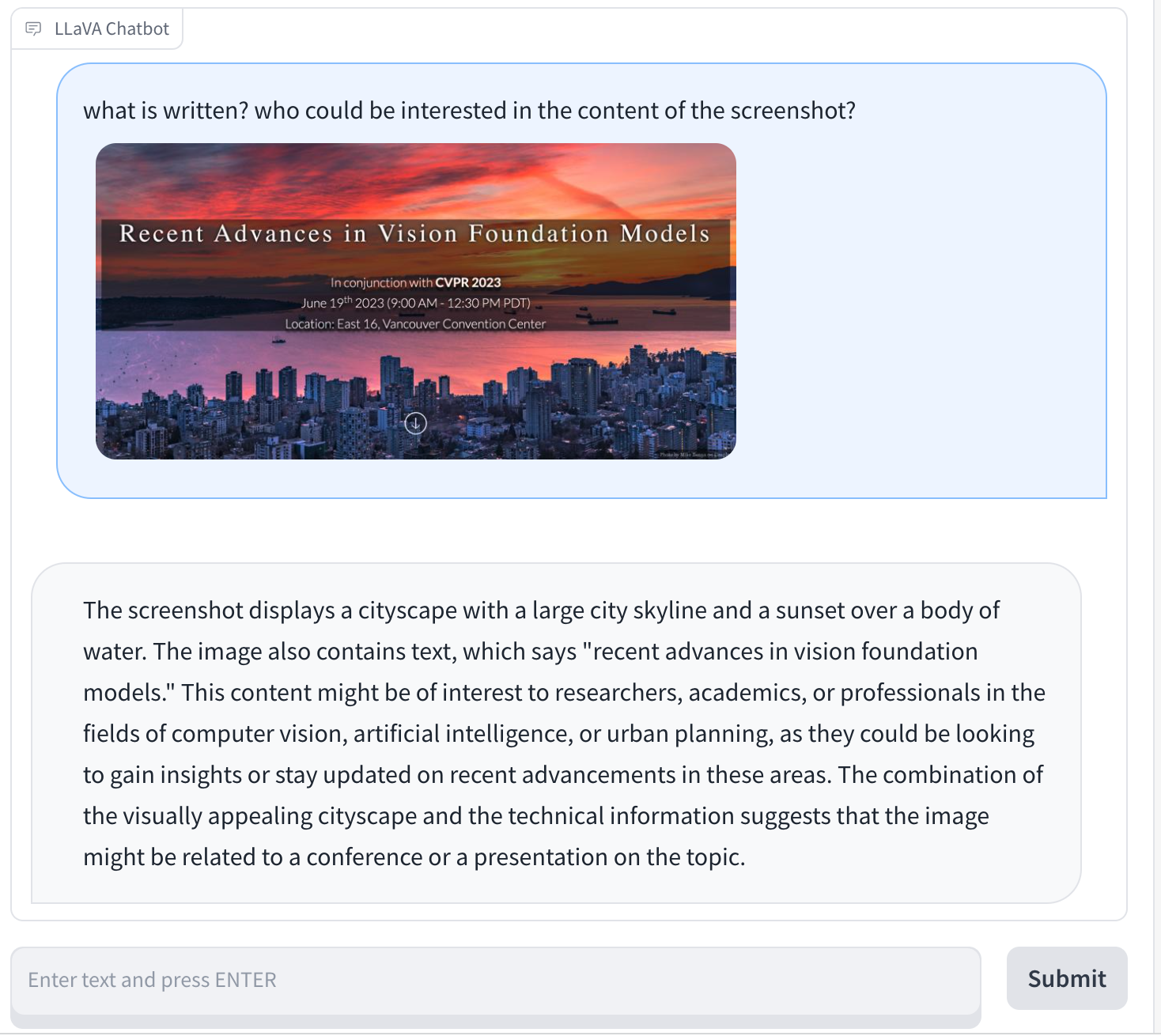

[2306.14895] Large Multimodal Models: Notes on CVPR 2023 Tutorial


Deploying Falcon-7B Into Production, by Het Trivedi